How to input LIDAR data in ArcGIS

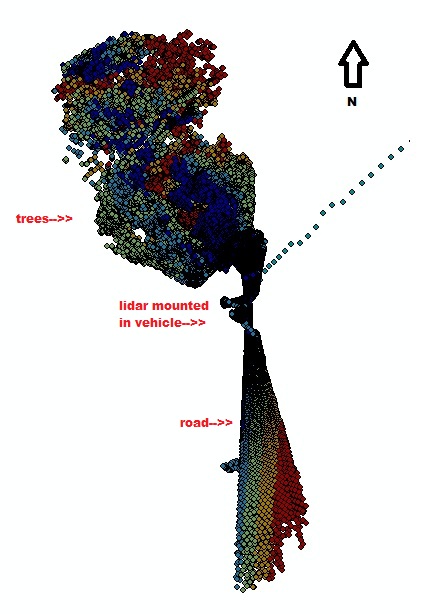

While I have been so problematic before how to resample my billions of points to have it visualized in 3D and further perform analysis over it, I found out that ArcGIS would be the best solution. The usual discretization is performed on my data, I scraped out all the noisy points by limiting my range to 5cm o 100m. Now I added another criterion, that is to remove all points below my LIDAR setup. That is to consider theta(horizontal FOV)<90 and theta >270 degrees. That in a way reduced my point cloud into almost 30% of the original. Memory-wise, it was a LUXURY already! Now for checking the irregularities in the observed point cloud, using the Point File Information Tool is by far the best solution. Using ArcGIS 10.0: 1. Go to 3D analayst tools>Conversion>From File> Point File Information. 2. You will be opt to browse lidar point cloud by File or by Folder. (I suggest that you browse by Folder if you've got more than 10 files.) 3. Now you will be opt for the f